Memory Management

This is quite possibly the most important part of any Kernel. And rightfully so--all programs and data require it. As you know, in the Kernel, because we are still in Supervisor Mode (Ring 0), we have direct access to every byte in memory. This is very powerful, but also produces problems, especially in a multitasking environment, where multiple programs and data require memory.

One of the primary problems we have to solve is: What do we do when we run out of memory?

Another problem is fragmentation. It is not always possible to load a file or program into a sequential area of memory. For example, let's say we have 2 programs loaded: one at 0x0, the other at 0x900. Both of these programs requested to load files, so we load the data files:

Notice what is happening here. There is a lot of unused memory between all of these programs and files. Okay... What happens if we add a bigger file that is unable to fit in any of the gaps above? This is when big problems arise with the current scheme. We cannot simply move things around in memory, as that would corrupt the currently executing programs and loaded files.
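
To make the fragmentation problem concrete, here is a minimal sketch in C. It is not part of the Kernel; the `region` structure, the function names, and the block sizes used in the note below are assumptions made up for illustration. It compares the total amount of free memory with the largest single gap that is actually usable for one file.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* A block of memory that is already in use (hypothetical layout). */
struct region {
    uint32_t start; /* first byte of the block */
    uint32_t size;  /* length in bytes */
};

/* Total free bytes in [0, mem_size). The used regions must be sorted
   by start address and must not overlap. */
uint32_t total_free(const struct region *used, size_t count, uint32_t mem_size)
{
    uint32_t free_bytes = 0;
    uint32_t cursor = 0; /* end of the previous used region */
    for (size_t i = 0; i < count; i++) {
        assert(used[i].start >= cursor);
        free_bytes += used[i].start - cursor;
        cursor = used[i].start + used[i].size;
    }
    assert(cursor <= mem_size);
    return free_bytes + (mem_size - cursor);
}

/* Largest single gap: the biggest file that still fits in one piece. */
uint32_t largest_gap(const struct region *used, size_t count, uint32_t mem_size)
{
    uint32_t best = 0;
    uint32_t cursor = 0;
    for (size_t i = 0; i < count; i++) {
        assert(used[i].start >= cursor);
        uint32_t gap = used[i].start - cursor;
        if (gap > best)
            best = gap;
        cursor = used[i].start + used[i].size;
    }
    assert(cursor <= mem_size);
    if (mem_size - cursor > best)
        best = mem_size - cursor;
    return best;
}
```

Suppose the used regions are 0x0-0x3FF, 0x600-0x7FF, 0x900-0xBFF and 0xE00-0xEFF in a 0x1000 byte memory (made-up numbers). Then `total_free` reports 0x600 bytes, but `largest_gap` reports only 0x200, so a 0x300 byte file cannot be loaded in one piece even though enough memory is free overall.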

Then there is the problem of where each program is loaded. Each program will be required to be Position Independent or provide Relocation Tables. Without this, we will not know what base address the program is supposed to be loaded at.

Let's look at these deeper. Remember the ORG directive? This directive sets the location where your program is expected to be loaded. If we load the program at a different location, the program will reference incorrect addresses, and will crash. We can easily test this theory. Right now, Stage2 expects to be loaded at 0x500. However, if we load it at 0x400 within Stage1 (while keeping the ORG 0x500 within Stage2), a triple fault will occur.
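
The arithmetic behind that crash can be sketched in a few lines of C. This is only an illustration: the `image` structure and the function names are hypothetical, and the image size used in the note below is an assumption, not the real size of Stage2.

```c
#include <assert.h>
#include <stdint.h>

/* A flat binary image (hypothetical description, not a real file format). */
struct image {
    uint32_t org;  /* address the image was assembled for (the ORG value) */
    uint32_t size; /* image length in bytes */
};

/* Address the assembler encoded for a label at the given offset. */
uint32_t encoded_address(const struct image *img, uint32_t offset)
{
    assert(offset < img->size);
    return img->org + offset;
}

/* Address where that label really lives after loading at load_base. */
uint32_t actual_address(const struct image *img, uint32_t load_base,
                        uint32_t offset)
{
    assert(offset < img->size);
    return load_base + offset;
}

/* Nonzero when every absolute reference in the image misses its target. */
int is_misloaded(const struct image *img, uint32_t load_base)
{
    return img->org != load_base;
}
```

With an image assembled for ORG 0x500 but loaded at 0x400, a label at offset 0x20 is referenced as 0x520 while it really sits at 0x420. Every absolute jump or data access is off by 0x100 bytes, which is why the code ends up executing or reading the wrong bytes.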

This raises a new problem: how do we know where to load a program? Considering all we have is a binary image, we cannot know. However, if we make it standard that all programs begin at the same address--let's say, 0x0--then we can know. This would work, but it is impossible to implement if we plan to support multitasking, because only one program can occupy a given physical address at a time. However, if we give each program its own memory space that virtually begins at 0x0, this will work. After all, from each program's perspective, they are all loaded at the same base address, even if they are at different locations in the real (physical) memory.

What we need is some way to abstract the physical memory. Let's look closer.

Virtual Address Space (VAS)

A Virtual Address Space is a Program's Address Space. Note that this does not have to correspond to System Memory. The idea is that each program has its own independent address space. This ensures one program cannot access another program's memory, because they are using different address spaces.

Because a VAS is Virtual and not tied directly to the physical memory, it allows the use of other sources, such as disk drives, as if they were memory. That is, it allows us to use more memory than what is physically installed in the system.

This fixes the "Not enough memory" problem.

Also, as each program uses its own VAS, we can have each program always begin at base 0x0000:0000. This solves the relocation problems discussed earlier, as well as memory fragmentation--as we no longer need to worry about allocating contiguous physical blocks of memory for each program.
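
As a rough sketch of the idea (not how the hardware or our Kernel actually stores it), each program's address space can be pictured as a small table that maps blocks of virtual addresses to wherever they really are in physical memory. The `address_space` structure, the block size, and the physical addresses in the note below are all assumptions chosen for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define BLOCK_SIZE 0x1000u /* assumed block size for this sketch */
#define NUM_BLOCKS 4u      /* assumed size of each address space, in blocks */

/* One table per program: phys_base[n] is the physical address where
   virtual block n of that program actually lives. */
struct address_space {
    uint32_t phys_base[NUM_BLOCKS];
};

/* Translate a program's virtual address into a physical address. */
uint32_t virt_to_phys(const struct address_space *as, uint32_t vaddr)
{
    uint32_t block = vaddr / BLOCK_SIZE;
    assert(block < NUM_BLOCKS);
    return as->phys_base[block] + vaddr % BLOCK_SIZE;
}
```

If program A's table is {0x10000, 0x50000, 0x20000, 0x90000} and program B's is {0x30000, 0x40000, 0x80000, 0x60000}, both programs can use virtual address 0x0, yet A reaches physical 0x10000 and B reaches 0x30000. Note also that A's blocks are scattered through physical memory while A itself sees one linear range.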

Virtual Addresses are mapped by the Kernel through the MMU. More on this a little later.

Virtual Memory: Abstract

Virtual Memory is a special Memory Addressing Scheme implemented by both the hardware and software. It allows non-contiguous memory to act as if it were contiguous memory.

Virtual Memory is based on the Virtual Address Space concept. It provides every program its own Virtual Address Space, allowing memory protection and decreasing memory fragmentation.

Virtual Memory also provides a way to indirectly use more memory than we actually have within the system. One common way of approaching this is by using Page files, stored on a hard drive.

Virtual Memory needs to be mapped through a hardware device controller in order to work, as it is handled at the hardware level. This is normally done through the MMU, which we will look at later.

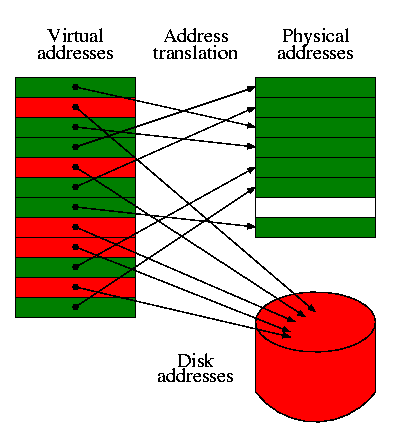

For an example of virtual memory in use, let's look at it in action:

Notice what is going on here. The memory blocks within the Virtual Address Space are linear. Each Memory Block is mapped to either its location within the real physical RAM, or another device, such as a hard disk. The blocks are swapped between these devices on an as-needed basis. This might seem slow, but the address translation itself is very fast thanks to the MMU.

Remember: Each program will have its own Virtual Address Space--shown above. Because each address space is linear, and begins from 0x0000:0000, this immediately fixes a lot of the problems relating to memory fragmentation and program relocation.

Also, because Virtual Memory can place memory blocks on different devices, it can easily manage more than the amount of memory within the system. For example, if there is no more system memory, we can allocate blocks on the hard drive instead. If we run out of memory, we can either increase this page file on an as-needed basis, or display a warning/error message.

Each memory "Block" is known as a Page, which is usually 4096 bytes in size.
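
Because 4096 is a power of two (2 to the 12th), any address splits cleanly into a page number and an offset within that page. The helpers below are a small sketch of that arithmetic; the names are made up for this example and are not taken from any real Kernel API.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SIZE  4096u
#define PAGE_SHIFT 12u              /* 4096 == 1 << 12 */
#define PAGE_MASK  (PAGE_SIZE - 1u) /* selects the low 12 bits */

static_assert((1u << PAGE_SHIFT) == PAGE_SIZE,
              "PAGE_SHIFT must match PAGE_SIZE");

/* Which page an address falls in. */
uint32_t page_number(uint32_t addr)
{
    return addr >> PAGE_SHIFT;
}

/* Where inside that page the address points. */
uint32_t page_offset(uint32_t addr)
{
    return addr & PAGE_MASK;
}

/* How many whole pages are needed to hold the given number of bytes. */
uint32_t pages_needed(uint32_t bytes)
{
    return bytes / PAGE_SIZE + (bytes % PAGE_SIZE != 0 ? 1u : 0u);
}
```

For instance, address 0x3ABC lies in page 3 at offset 0xABC, and a 4097 byte file needs two pages, since the last byte spills over into a second one.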

Once again, we will cover everything in much more detail later.

Memory Management Unit (MMU): Abstract

The MMU, also known as the Paged Memory Management Unit (PMMU), is a component inside the microprocessor responsible for the management of the memory requested by the CPU. It has a number of responsibilities, including translating Virtual Addresses to Physical Addresses, Memory Protection, Cache Control, and more.

Segmentation: Abstract

Segmentation is a method of Memory Protection. In Segmentation, we only allocate a certain address space to the currently running program. This is done through the hardware registers.

Segmentation is one of the most widely used memory protection schemes. On the x86, it is usually handled by the segment registers: CS, SS, DS, and ES.

We have seen the use of this through Real Mode.
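
As a reminder of how that worked, Real Mode forms a physical address by shifting the 16-bit segment value left by 4 bits (multiplying by 16) and adding the 16-bit offset. The following is a sketch of that rule in C; the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Real Mode address translation: physical = segment * 16 + offset. */
uint32_t real_mode_address(uint16_t segment, uint16_t offset)
{
    return ((uint32_t)segment << 4) + offset;
}

/* Segment value whose base is the 16 byte paragraph containing phys.
   Only meaningful for addresses below 1 MB. */
uint16_t segment_of(uint32_t phys)
{
    assert(phys < 0x100000u);
    return (uint16_t)(phys >> 4);
}
```

This is why 0x07C0:0x0000 and 0x0000:0x7C00 both name the same byte at physical address 0x7C00: different segment:offset pairs can overlap.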

Paging: Abstract

THIS will be important to us. Paging is the process of managing program access to the virtual memory pages that are not in RAM. We will cover this a lot more later.
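
Until then, here is a simplified sketch of the idea in C. It is not the real x86 page table format; the `page_entry` structure, the table size, and the function names are assumptions made for illustration. Each page is either present in RAM or not, and an access to a page that is not present has to be handled before the program can continue.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SIZE 4096u
#define NUM_PAGES 4u /* assumed table size for this sketch */

struct page_entry {
    bool     present; /* true: page is in RAM; false: it must be loaded */
    uint32_t frame;   /* physical base address, valid only when present */
};

/* Translate vaddr. Returns true and stores the physical address when the
   page is in RAM; returns false (a "page fault") when it is not. */
bool translate(const struct page_entry *table, uint32_t vaddr,
               uint32_t *paddr)
{
    uint32_t page = vaddr / PAGE_SIZE;
    assert(page < NUM_PAGES);
    if (!table[page].present)
        return false;
    *paddr = table[page].frame + vaddr % PAGE_SIZE;
    return true;
}

/* After a fault, the page is brought into a free physical frame and
   marked present. Reading the data from disk is omitted here. */
void page_in(struct page_entry *table, uint32_t page, uint32_t free_frame)
{
    assert(page < NUM_PAGES);
    table[page].frame = free_frame;
    table[page].present = true;
}
```

A failed `translate` followed by `page_in` and a retry mirrors, very roughly, what happens when a program touches a page that currently lives in the page file.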